AVR Testing

The MDS development environment contains automated visual regression tests built on the BackstopJS testing framework.

Overview

BackstopJS is an automated visual regression (AVR) testing framework capable of capturing and comparing screenshots using various browser engines and platforms. AVR tests run by taking screenshots of a component test page. Each test page contains examples that represent nearly every permutation of the component's properties.

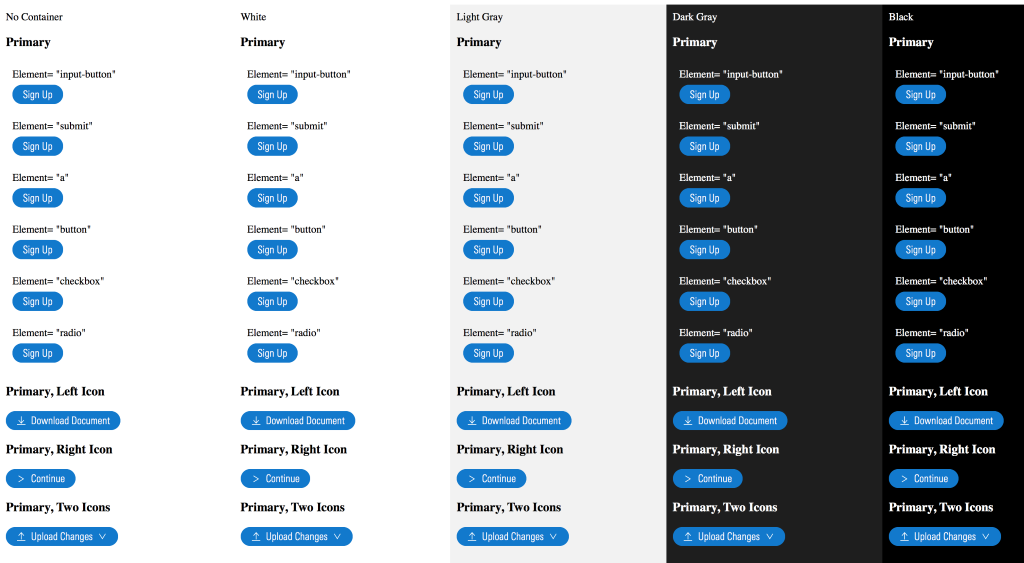

Test page for the Buttons component.


Testing Requirements

- BackstopJS version 3.5.10 or higher.

- Docker Community Edition (Free) version 17.12 or higher. You must install Docker prior to running the tests. Docker is a “headless virtual machine” that spins up while the tests are running and then exits after the tests have finished. Docker ensures tests are run in an identical environment regardless of who is running the tests.
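The "or higher" requirements above call for a numeric comparison rather than a string comparison: as plain strings, "3.5.10" sorts before "3.5.9". The following is a minimal sketch of such a check, assuming you already have the installed version strings at hand. The helper names `parseVersion` and `meetsMinimum` and the `MINIMUMS` table are hypothetical and are not part of MDS or BackstopJS.

```javascript
// Hypothetical helpers for illustration only; not part of MDS or BackstopJS.

// Minimum versions taken from the requirements list above.
const MINIMUMS = { backstopjs: "3.5.10", docker: "17.12" };

// Split a dotted version such as "17.12.0-ce" into numbers: [17, 12, 0].
// parseInt ignores trailing non-numeric suffixes such as "-ce".
function parseVersion(version) {
  return version.split(".").map((part) => Number.parseInt(part, 10) || 0);
}

// Return true when `installed` is equal to or higher than `minimum`,
// comparing each numeric segment from left to right.
function meetsMinimum(installed, minimum) {
  const a = parseVersion(installed);
  const b = parseVersion(minimum);
  const length = Math.max(a.length, b.length);
  for (let i = 0; i < length; i += 1) {
    const left = a[i] || 0;
    const right = b[i] || 0;
    if (left !== right) {
      return left > right;
    }
  }
  return true; // every segment is equal
}
```

For example, `meetsMinimum("3.5.9", MINIMUMS.backstopjs)` is false because 9 is lower than 10 in the third segment, while `meetsMinimum("17.12.0-ce", MINIMUMS.docker)` is true.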

When to Test

- Before submitting a pull request to the develop branch in the library directory.

- Prior to each release for quality assurance.

Creating Tests

When building a new component, see the building a component page for detailed instructions and terminal commands for generating all of the starter files you will need. One of these files will be a test page where you will create your AVR tests, located at:

library/test/visual/test-pages/components/[new-component].njk

To create a test, wrap each section of the component test page in a special Nunjucks macro:

{% call test_row() %}

<h2 class="mds-test__header">Default</h2>

{{ library.button() }}

{% endcall %}

Everything within the test_row() call will be captured as a new image and used to create a new test.
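To show how a row on a test page could become its own screenshot, here is a rough sketch of a BackstopJS configuration object. It is not the actual MDS configuration, which this page does not show. The `.mds-test__row` selector, the local URL, the viewport, and the output paths are all assumptions made for illustration. The option names themselves (`scenarios`, `selectors`, `selectorExpansion`, `misMatchThreshold`, `viewports`, `paths`, `engine`, `report`) are standard BackstopJS settings.

```javascript
// Illustrative sketch only; the real MDS BackstopJS setup may differ.

// Build one BackstopJS scenario for a component test page.
function buildScenario(component) {
  return {
    label: `${component} (individual)`,
    // Hypothetical URL: assumes the compiled test page is served locally.
    url: `http://localhost:3000/test-pages/components/${component}.html`,
    // Hypothetical class: assumes test_row() renders a wrapper with it.
    selectors: [".mds-test__row"],
    // Capture every element matching the selector as a separate image.
    selectorExpansion: true,
    // Percentage of differing pixels tolerated before a test fails.
    misMatchThreshold: 0.1,
  };
}

const config = {
  id: "mds_avr_sketch", // hypothetical identifier
  viewports: [{ label: "desktop", width: 1280, height: 800 }],
  scenarios: ["button"].map(buildScenario),
  paths: {
    // Hypothetical output locations for baseline and latest screenshots.
    bitmaps_reference: "test/visual/reference",
    bitmaps_test: "test/visual/latest",
    html_report: "test/visual/report",
  },
  engine: "puppeteer",
  report: ["browser"], // open the HTML report in the browser after a run
};
```

With `selectorExpansion` enabled, each matching row produces its own comparison, so a failure points at a specific example rather than the whole page.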

Running Tests

The testing functionality is exposed via npm run commands:

AVR terminal.


- npm run avr runs the tests by initiating the AVR CLI. Follow the prompts indicated in the CLI to configure your AVR test run. There are three different types of AVR tests:

  - Baseline: Compares against the baseline HTML/CSS images stored under the library directory. These tests run against the HTML/CSS “individual” test pages. This is the most common use of AVR.

  - MDSWC Comparisons: Compares the MDSWC web components against the “individual” HTML/CSS. This ensures the web components and the HTML/CSS are visually indistinguishable from each other.

  - Individual vs Library Comparisons: By default, test pages only include the individual component.css. This visual diffing test copies each of those “individual” test pages and creates a new version that contains the entire library, mds.css. The “individual” and “library” versions of the test page are compared to ensure there are no issues when the entire CSS library is loaded on the page.

- npm run avr:approve-new-baseline promotes the latest test images as the new baseline for subsequent tests.

Run npm run avr from the command line to run tests. Testing can take several minutes depending on the speed of your computer. Once a test is complete, the results will display in your browser.

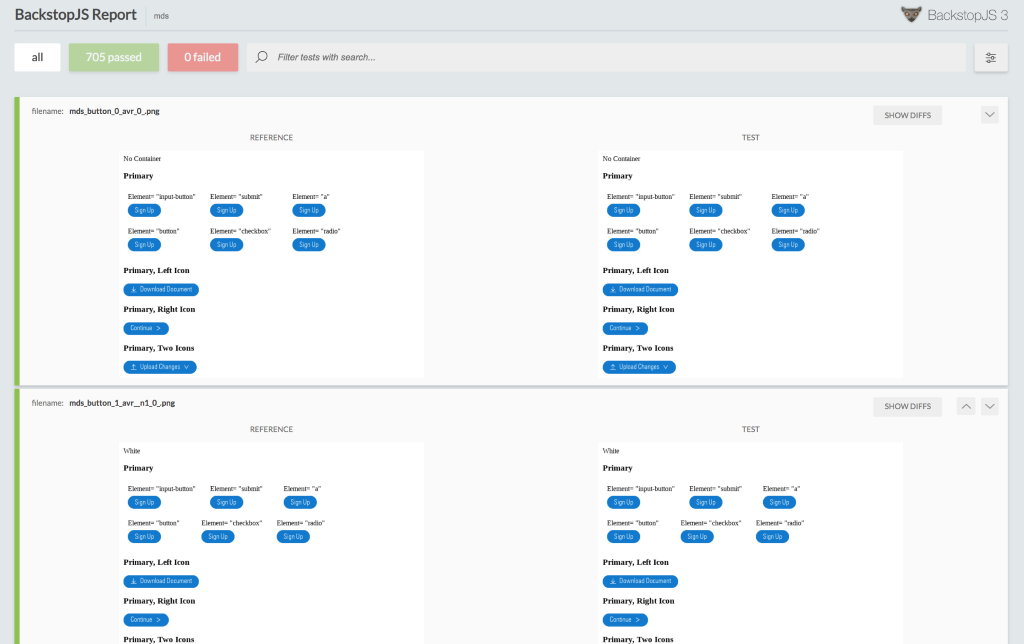

Test report for passed tests.


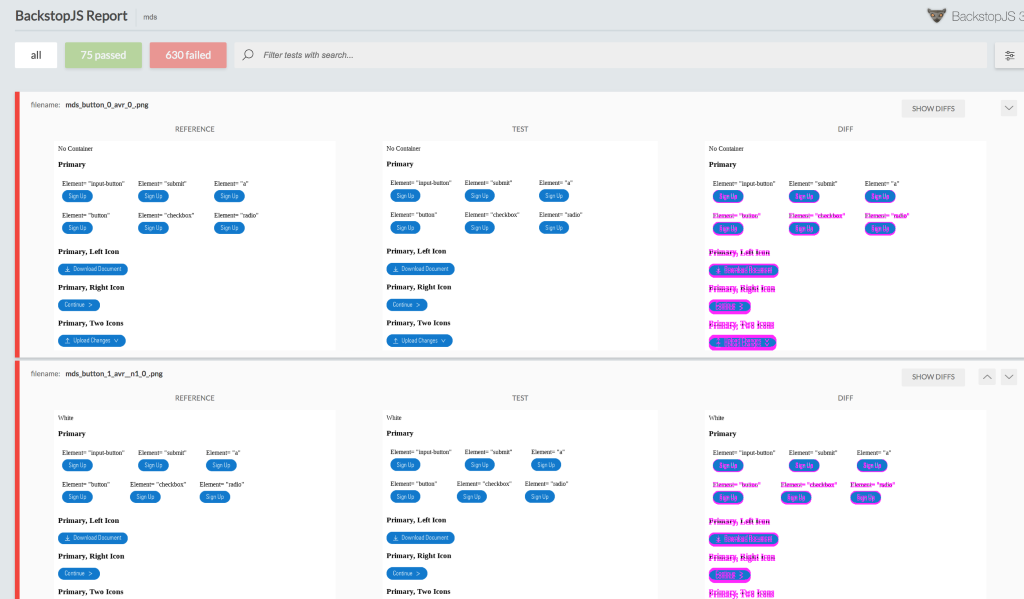

Test report for failed tests.


Assessing “Failed” Tests

A visual diffing test can “fail” in two ways:

- Expected failures serve as confirmation that changes made to MDS source code altered a component’s display in an expected manner.

- Unexpected failures reveal unintended changes incompatible with your intent. If a test reveals unexpected changes, correct the code and rerun the test until you experience no incompatibilities.

Promoting Expected Differences

Run npm run avr:approve-new-baseline to promote the latest test’s screenshots as the new baseline screenshots for future tests. This tells the testing framework, “These changes are approved. Don’t flag them as failures anymore.” Commit the new baseline images to the MDS repository along with the code changes that triggered the visual difference.